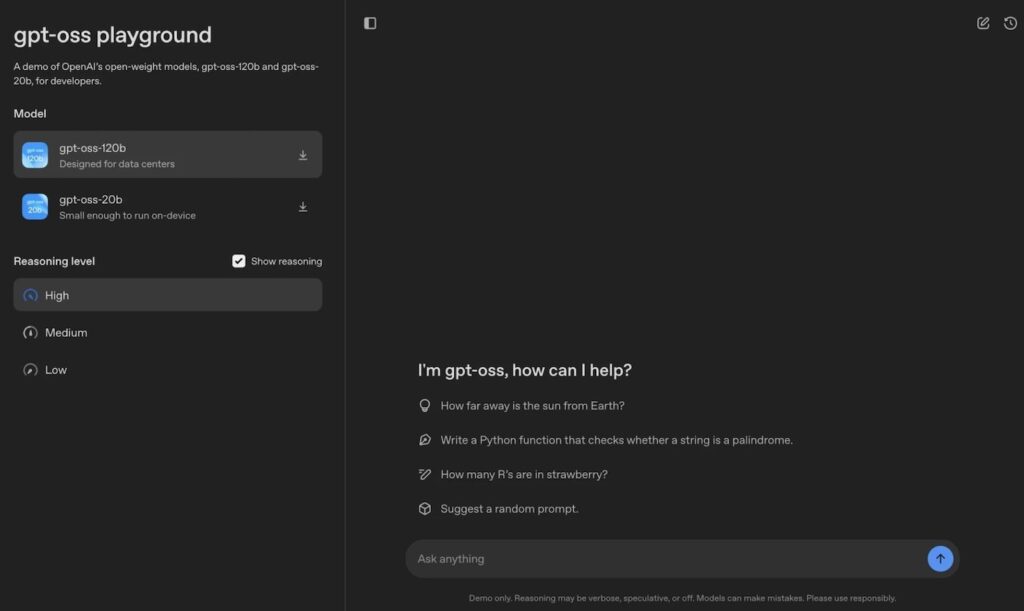

OpenAI has released two new “open” artificial intelligence models, its first since GPT-2 in 2019. The smaller of the two, gpt-oss-20b, has 21 billion parameters and is similar in performance to o3-mini; plus, it only needs 16GB of memory, so it can be run on a consumer laptop or powerful phone. The larger, gpt-oss-120b, has 117 billion parameters, can run on a single NVIDIA GPU, and is comparable in performance to o4-mini.

In its Aug. 5 press release, OpenAI says the models can complete advanced reasoning tasks, write code, search the web, and power autonomous agents that act on the user’s behalf, for example through the company’s Responses API. The new AI models were trained on a text-only dataset, so they cannot process or generate images, audio, or other media types.

In CEO Sam Altman’s X post about gpt-oss, he wrote: “We’re excited to make this model, the result of billions of dollars of research, available to the world to get AI into the hands of the most people possible. We believe far more good than bad will come from it; for example, gpt-oss-120b performs about as well as o3 on challenging health issues. We have worked hard to mitigate the most serious safety issues, especially around biosecurity.”

Notably, OpenAI describes its new models as “open weight” rather than “open source.” This is because the definition provided by the Open Source Initiative requires a model’s developer to publish its training data, which Sam Altman’s company will not do, likely because of the multiple ongoing lawsuits from copyright holders over data sourcing. The Open Source Initiative likewise does not recognise Meta’s Llama models as open source because they carry commercial restrictions, despite Meta’s claims otherwise.

OpenAI’s new models arrive on the heels of DeepSeek’s R1. The Chinese company ruffled the feathers of the top AI players in January as it demonstrated that high-performance, open-source models could be built and deployed at a fraction of the cost.

How OpenAI’s new open-weight models work

Both of OpenAI’s new open models leverage a “mixture of experts” architecture to improve model efficiency. This means only a few specialised parts of the neural network, called “experts,” are activated at a time, with each expert using a subset of the model’s total parameters. OpenAI says gpt-oss-120b only activates 5.1 billion parameters per token.
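To make the routing idea concrete, here is a minimal Python sketch of top-k expert selection. It is an illustration only, not OpenAI’s implementation: the expert count, `TOP_K`, the toy scaling “experts,” and every function name are assumptions chosen for readability. The final lines simply divide the two figures quoted in this article (5.1 billion active parameters out of 117 billion in total).

```python
import math
import random


def softmax(xs):
    """Turn raw scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def make_expert(scale):
    """Hypothetical stand-in for an expert network: it just scales its input."""
    return lambda x: [scale * xi for xi in x]


def moe_layer(x, experts, router, k):
    """Run only the k selected experts on x and mix their outputs."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    routes = top_k_route(logits, k)
    out = [0.0] * len(x)
    for idx, weight in routes:
        y = experts[idx](x)  # every other expert is skipped for this token
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, [idx for idx, _ in routes]


# Toy sizes, assumed for illustration (not the real gpt-oss configuration).
random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
experts = [make_expert(s) for s in range(1, NUM_EXPERTS + 1)]
router = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output, used = moe_layer(token, experts, router, TOP_K)
print(f"experts used: {sorted(used)} of {NUM_EXPERTS}")

# Share of gpt-oss-120b parameters active per token, from the article's figures.
active_fraction = 5.1 / 117
print(f"gpt-oss-120b active share: {active_fraction:.1%}")
```

In this toy run, only two of the eight experts do any work for the token, which is where the efficiency gain comes from. By the figures quoted here, gpt-oss-120b touches roughly 4% of its parameters per token.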

The open models were also trained using reinforcement learning, where they learn by interacting with simulated environments and receiving feedback in the form of rewards, to improve their future decisions.
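As a rough illustration of learning from rewards, the sketch below runs an epsilon-greedy agent against a simulated three-option environment. This is a textbook toy, not OpenAI’s training method; the reward probabilities, step count, and function name are all made up for the example.

```python
import random


def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Learn which action pays off most by trying actions and observing rewards."""
    rng = random.Random(seed)
    counts = [0] * len(reward_probs)    # how often each action was tried
    values = [0.0] * len(reward_probs)  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.randrange(len(reward_probs))
        else:
            # Exploit: pick the action with the best estimate so far.
            action = max(range(len(values)), key=lambda a: values[a])
        # The simulated environment returns a reward of 1 or 0.
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental update of the average reward for this action.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


# Hypothetical environment: three actions with different chances of reward.
values, counts = run_bandit([0.2, 0.5, 0.8])
print("estimated value per action:", [round(v, 2) for v in values])
print("times each action was chosen:", counts)
```

The agent starts with no knowledge and, guided only by rewards, ends up favouring the action that pays off most often. Training a language model this way is far more involved, but the feedback loop is the same in spirit.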

Open-weight models are often considered riskier than closed systems because they can be modified and redistributed without oversight, making misuse harder to contain. OpenAI says it tested its two open-weight models with intentionally harmful fine-tuning and found they did not reach dangerous capability thresholds in areas like bioweapons development, a concern the company has openly acknowledged.

OpenAI’s gpt-oss-120b and gpt-oss-20b are available today on over a dozen platforms, including Hugging Face, Cloudflare, Azure, and AWS, and are licensed under the Apache 2.0 license, allowing for free use, modification, and redistribution, including for commercial purposes.

OpenAI is open by name and by nature, again

Although its name suggests otherwise, OpenAI has kept its models closed source in recent years. The company has not released the underlying model weights or training data to the public, so it can monetise the models through paid APIs, enterprise deals, and platform partnerships. This approach has played a key role in OpenAI’s pursuit of a reported $500 billion valuation.

However, a number of events have led OpenAI to hit the brakes on its commercial ambitions. For one, DeepSeek and other Chinese AI companies are releasing open models that match or exceed OpenAI’s offerings in size and performance. Meta also intended to compete in this area, but its Llama models have been underwhelming.

In a Reddit Ask Me Anything session early this year, Altman admitted OpenAI had “been on the wrong side of history” by not releasing its model weights.

Furthermore, last month, US President Donald Trump specifically endorsed open-source and open-weight AI models in his AI Action Plan. He argued they provide “geostrategic value” by helping US technology become the global standard and by supporting academic research and start-ups.

“Our open-weights models are designed to meet that moment: to align with US policy priorities that have broad support across the political spectrum while giving developers everywhere the freedom to innovate on their own terms,” OpenAI wrote in a blog post.

Comparison of AI models’ performance in various benchmarks

| Benchmark name | What the AI model is tested on | gpt-oss-120b | gpt-oss-20b | o3 | o4-mini | DeepSeek-R1 | Llama 4 Maverick | Gemini 2.5 Pro |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Humanity’s Last Exam (HLE) | 2,500 multimodal questions across a broad range of subjects | 19% (with tools) | 17.3% (with tools) | 24.9% (with tools) | 17.7% (with tools) | 8.5% (evaluated on text only as not multimodal) | 5.7% | 21.6% |
| Codeforces Elo | Programming problems from Codeforces contests | 2622 (with tools) | 2516 (with tools) | 2516 | 2706 | 2029 | 1417 | – |
| American Invitational Mathematics Examination (AIME) 2025 | 15 questions from a famously tough high school math contest | 97.9% | 98.7% | 98.4% | 99.5% | 70% | 19.3% | 86.7% |
| PersonQA hallucinations | The rate at which the model hallucinates when asked questions about people | 49% | 53% | – | 36% | – | – | – |

On the gpt-oss models’ higher hallucination rates relative to o4-mini, OpenAI said in its model card, “This is expected, as smaller models have less world knowledge than larger frontier models and tend to hallucinate more.”

Editor’s note: This article was originally published on our sister site TechRepublic.